VTA Deep Learning Accelerator

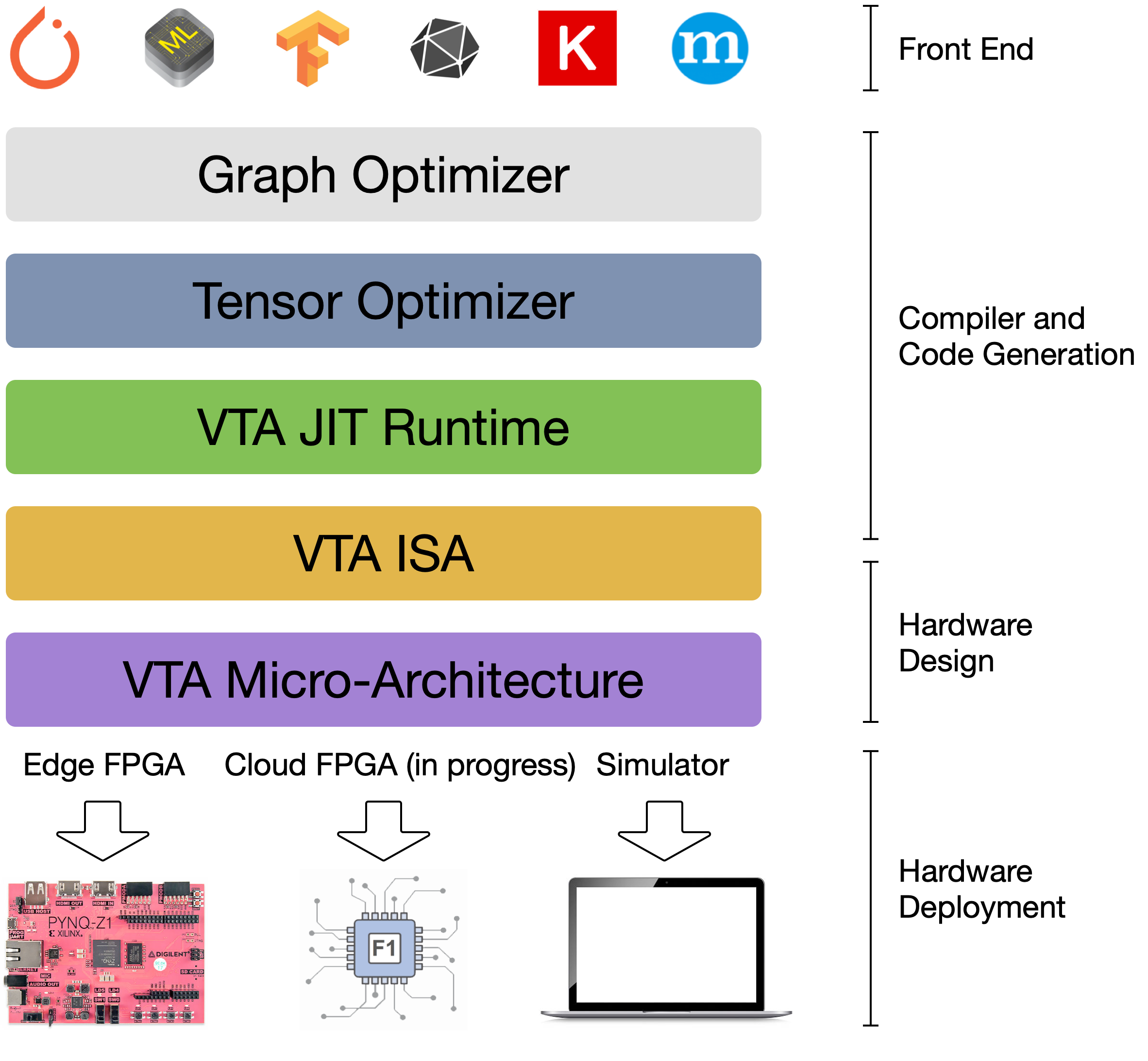

The Versatile Tensor Accelerator (VTA) is an extension of the TVM framework designed to advance deep learning and hardware innovation. VTA is a programmable accelerator that exposes a RISC-like programming abstraction to describe compute and memory operations at the tensor level. We designed VTA to expose the most salient and common characteristics of mainstream deep learning accelerators, such as tensor operations, DMA loads and stores, and explicit compute/memory arbitration.
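To make the idea of a tensor-level, RISC-like abstraction more concrete, here is a toy Python interpreter. It is a minimal sketch under stated assumptions: the instruction names (`Load`, `Gemm`, `Store`), the string-addressed buffers, and the `run` function are hypothetical illustrations, not VTA's actual instruction set or TVM's API. What it shows is the general pattern. Compute instructions read only on-chip buffers, so the program itself must schedule the DMA loads and stores that move tensors between DRAM and the chip.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

Matrix = List[List[int]]


@dataclass
class Load:
    """Hypothetical DMA load: copy a tensor from DRAM into an on-chip buffer."""
    dram_addr: str
    sram_addr: str


@dataclass
class Gemm:
    """Hypothetical tensor op: sram[dst] += sram[a] @ sram[b] (on-chip operands only)."""
    dst: str
    a: str
    b: str


@dataclass
class Store:
    """Hypothetical DMA store: copy an on-chip tensor back to DRAM."""
    sram_addr: str
    dram_addr: str


Instr = Union[Load, Gemm, Store]


def matmul_acc(acc: Matrix, a: Matrix, b: Matrix) -> Matrix:
    """Return acc + a @ b using plain integer arithmetic."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [acc[i][j] + sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def run(program: List[Instr], dram: Dict[str, Matrix]) -> Dict[str, Matrix]:
    """Execute instructions in order. Gemm never touches DRAM directly:
    every operand must first be brought on chip by an explicit Load."""
    sram: Dict[str, Matrix] = {}
    for instr in program:
        if isinstance(instr, Load):
            sram[instr.sram_addr] = [row[:] for row in dram[instr.dram_addr]]
        elif isinstance(instr, Gemm):
            a, b = sram[instr.a], sram[instr.b]  # KeyError if not loaded first
            acc = sram.get(instr.dst, [[0] * len(b[0]) for _ in a])
            sram[instr.dst] = matmul_acc(acc, a, b)
        elif isinstance(instr, Store):
            dram[instr.dram_addr] = [row[:] for row in sram[instr.sram_addr]]
    return dram


if __name__ == "__main__":
    dram = {"x": [[1, 2], [3, 4]], "w": [[5, 6], [7, 8]]}
    program = [
        Load("x", "inp0"),            # memory op
        Load("w", "wgt0"),            # memory op
        Gemm("acc0", "inp0", "wgt0"), # compute op on on-chip data
        Store("acc0", "y"),           # memory op
    ]
    print(run(program, dram)["y"])  # [[19, 22], [43, 50]]
```

In this toy, keeping memory movement explicit in the instruction stream means the software that emits the program, not the hardware, decides when each tensor moves on or off chip.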

Check out our blog post, the tech report *VTA: An Open Hardware-Software Stack for Deep Learning*, tutorials and guides, and the latest updates at tvm.ai.

People

- Tianqi Chen, Assistant Professor, CMU
- Thierry Moreau, Affiliate Assistant Professor
- Arvind Krishnamurthy, Professor
- Luis Ceze, Professor
- Carlos Guestrin, Professor, Stanford